Credentials

Before starting, you need to add credentials so Mage can execute your SQL

commands.

Follow the steps for the database or data warehouse of your choice:

Add SQL block to pipeline

- Create a new pipeline or open an existing pipeline.

- Add a data loader, transformer, or data exporter block.

- Select

SQL.

There are 4 - 5 fields that must be configured for each SQL block:

| Field | Required | Description |

|---|

| Data provider | Yes | The database or data warehouse you want to execute your SQL commands in. |

| Profile | Yes | When you add your credentials to the io_config.yaml file, you added them under a key. That key is called the profile. Choose which set of credentials you want this SQL block to use. For detailed information about setting up profiles, see the IO Config Setup documentation. |

| Use raw SQL | No | You can write raw SQL and it’ll be executed as written in your data provider. |

| Database | Depends on data provider | Some data warehouses require that we explicitly state the name of the database we want to write to. If this is present, it’s required. The name of the table that is created follows this convention: [database].[schema].[pipeline UUID]_[block UUID]. |

| Schema to save | Yes | Every SQL block will save data to your data provider. The name of the table that is created follows this convention: [schema].[pipeline UUID]_[block UUID]. |

| Table | No | SQL blocks will automatically name your table for you using a naming convention (see the section Automatic naming of tables for more information). You can override this automatic naming convention by filling in a value in this field. |

| Write policy | Yes | How do you want to handle existing data with the same database, schema, and table name? See below for more information. |

Write policies

| Policy | Description |

|---|

| Append | Add rows to the existing table. |

| Replace | Delete the existing data. |

| Fail | Raise an error during execution. |

YAML configuration

You can also modify block configuration in pipeline’s metadata.yaml file. Each block has a configuration field.

Example configuration

configuration:

data_provider: sqlserver

data_provider_profile: default

data_provider_schema: ''

export_write_policy: append

limit: 1000

limit_in_pipeline_run: 1

use_raw_sql: false

| Field | Required | Description |

|---|

| limit | No | The maximum number of rows to return in notebook. |

| limit_in_pipeline_run | No | The maximum number of rows to return when running the block in the pipeline run. |

Dynamic data_provider_profile

You can configure the data_provider_profile in a SQL block to resolve dynamically at runtime using Mage variable syntax.

This is done by modifying the pipeline’s metadata.yaml directly.

Example:

configuration:

data_provider: snowflake

data_provider_profile: '{{ variables("DB_PROFILE") }}'

data_provider_schema: analytics

export_write_policy: append

Automatically created tables

Each SQL block will create a table in the data provider of your choice.

When you run a block, it’ll execute your SQL command, then store the results in

a table created in your database or data warehouse.

Using raw SQL

If you’re using version 0.9.69 or less and interpolating a SQL command from an upstream block that uses raw SQL:

if the downstream block’s SQL command is referencing the upstream block using a variable such as {{ df_1 }} and ends the statement with a semi-colon (;), there is an interpolation error.

To work around the error, remove the semi-colon ;.

A fix will be available in 0.9.70.

CREATE TABLE command and the

INSERT command.

For example, if a table already exists then you can write the INSERT statement:

INSERT INTO mage.users

SELECT 1 AS id, 'Urza' AS username;

CREATE TABLE statement

and the INSERT statement:

CREATE TABLE IF NOT EXISTS mage.users (

id BIGINT

, username VARCHAR(255)

);

WITH users AS (

SELECT

1 AS id

, 'Urza' AS username

)

INSERT INTO mage.users

SELECT

*

FROM users;

mage.users with 2 columns: id as a BIGINT

and username as a VARCHAR(255).

Then, it’ll insert a single row into that table.

Required SQL statements

When writing raw SQL, you must use at least 1 of the following statements:

SELECT

INSERT

INSERT INTO some_table

SELECT 1;

CREATE TABLE

CREATE TABLE some_table (id BIGINT);

DROP TABLE

UPDATE

UPDATE some_table

SET col1 = 'value1'

WHERE col2 = 'value2'

Multiple SQL statements

You can execute multiple SQL statements in a SQL block. Separate your SQL statements using a semi-colon (;).

Automatic naming of tables

If you don’t choose the setting for using raw SQL,

the name of this automatically created table follows these conventions:

- If

Database field is configured:

[database].[schema].[pipeline UUID]_[block UUID]

- If no

Database field is configured: [schema].[pipeline UUID]_[block UUID]

Where pipeline UUID is the name of the current pipeline you’re editing.

Where block UUID is the name of the SQL block you are running.

Upstream blocks

If your SQL block depends on upstream blocks that aren’t SQL blocks (e.g. Python

code blocks), then those blocks will also automatically create tables.

The name of those tables follows the same naming convention mentioned above.

Variables

All SQL blocks have the following variables they can access in their query:

{{ execution_date }}

The date and time the block is run.

Example

SELECT '{{ execution_date }}' AS today

today |

|---|

2022-09-24 23:01:08.376057 |

If a SQL block has 1 or more upstream blocks, then they have access to their

parent blocks’ output using the following variable:

{{ df_1 }}

Depending on how many upstream blocks there are, the variable name changes. For

example, if there are 3 upstream blocks then there are 3 variables that can be

accessed:

{{ df_1 }}{{ df_2 }}{{ df_3 }}

The SQL block UI will display which variable maps to which upstream block. By

convention, the 1st added upstream block will be {{ df_1 }}, and every

upstream block added after that will have an incrementing number in the variable

name after the prefix df_.

Example

SELECT a.id , b.username FROM {{ df_1 }} AS a LEFT JOIN {{ df_2 }} AS b ON

a.id = b.user_id LIMIT 1

id | username |

|---|

1 | Sorcerer supreme |

Variable interpolation

Available in versions >= 0.9.59

- Upstream block output

- Variables

- Global variables

- Pipeline variables

- Runtime variables

- Environment variables

Upstream block output

Use the data from 1 or more upstream block’s output by using the block_output function.

block_uuid

The UUID of the upstream block to get data from.

If argument isn’t present, data from all upstream blocks will be fetched.

| |

|---|

| Optional | ✅ |

| Type | str |

| Example | 'data_loader_block' |

parse

A lambda function to parse the data from an upstream block’s output.

If the parse argument isn’t present, then the fetched data from the upstream block’s output

will be interpolated as is.

| |

|---|

| Optional | ✅ |

| Type | function |

| Example | lambda data, variables: data['runtime'] * variables['tries'] |

-

data

If the block_uuid argument isn’t present, then the 1st argument in the lambda function is

a list of objects.

The list of objects contain the data from an upstream block’s output.

The positional order of the data in the list corresponds to the current block’s upstream blocks

order.

For example, if the current block has the following upstream blocks with the following output:

load_api_data: [1, 2, 3]load_users_data: { 'mage': 'powerful' }

Then the 1st argument in the lambda function will be the following list:

[

[1, 2, 3],

{ 'mage': 'powerful' },

]

| |

|---|

| Optional | ❌ |

| Type | If block_uuid argument is present, then the type depends on the output from that block. If block_uuid isn’t present, then the type is list. |

| Example | { 'mage': 'powerful' } |

-

variables

A dictionary containing pipeline variables and runtime variables.

| |

|---|

| Optional | ❌ |

| Type | dict |

| Example | { 'fire': 40 } |

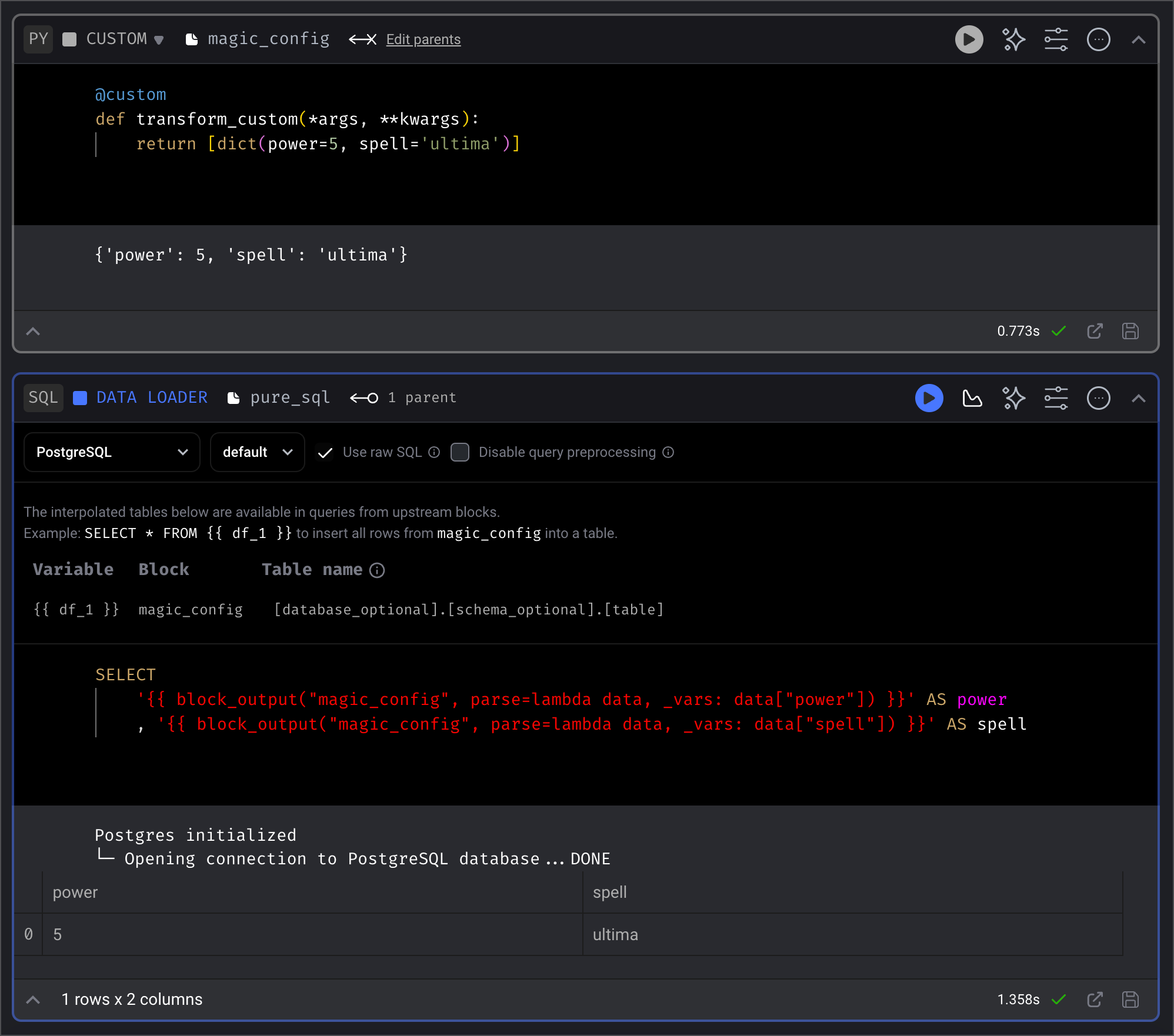

Example

With block_uuid

SELECT

'{{ block_output("magic_config", parse=lambda data, _vars: data["power"]) }}' AS power

, '{{ block_output("magic_config", parse=lambda data, _vars: data["spell"]) }}' AS spell

FROM mage.core_spells

SELECT

'{{ block_output("data_loader_block", parse=lambda data, variables: data["runtime"] * variables["tries"]) }}' AS data

FROM mage.core_spells

SELECT

'{{ block_output("load_recent_user", parse=lambda user, _variables: user["id"]) }}'

FROM mage.core_spells

block_uuid

SELECT

'{{ block_output(parse=lambda data, _vars: data[0]["power"]) }}' AS power

, '{{ block_output(parse=lambda data, _vars: data[0]["spell"]) }}' AS spell

FROM mage.core_spells

SELECT

'{{ block_output(parse=lambda data, variables: data[0]["runtime"] * variables[0]["tries"]) }}' AS data

FROM mage.core_spells

SELECT

'{{ block_output(parse=lambda user, _variables: user[0]["id"]) }}'

FROM mage.core_spells

Variables

Interpolate values from a dictionary containing keys and values from:

- Global variables

- Pipeline variables

- Runtime variables

Example

SELECT

'{{ variables("mana") }}' AS mana

FROM mage.dual_lands

Environment variables

Interpolate values from the environment variables.

Example

SELECT

'{{ env_var("ALLOW_POWER_9") }}' AS vintage

FROM mage.mox

Executing pure SQL

Available in versions >= 0.9.59