- Overview

- Building Transformer Actions

- Transformer Action Reference

- Appendix: Filter Syntax

- Appendix: Column Types

Tutorial

To introduce how the Transformer Actions library works, we will perform some cleaning actions on the Titanic Survival dataset. Open the Mage app and create a new pipeline. Add a data loader with the following code to load the Titanic Survival dataset: You should see the following code in your new transformer block:

You should see the following code in your new transformer block:

build_transformer_action()creates a transformer action payload, which is a dictionary that describes settings for the action to complete. The arguments passed in aredf- the data frame to transform, used to infer column typesaction_type- specifies the action to perform. In this case, we are performing anIMPUTEaction.arguments- specifies the columns to perform the action on. Currently, the action is configured to impute all columns of the data frame. Since we only want to transform “Cabin”, “Age”, and “Embarked”, we will update this entry to only contain these column names.axis- specifies which axis to perform the action on. Imputing values is done on a per column basis, so this is a column transformation.options- these are extra settings to specify for this action. For an impute transformation, the main option to specify is'strategy', dictating the imputation strategy to use. See Fill in Missing Values for details on the valid imputation strategies. For now we will useMODE, which specifies to fill empty values with the most frequent value per column.

build_transformer_action(), the payload is used to construct the BaseAction object, representing a reusable instance of the transformation. Then the transformation is executed on the input data frame using execute(), returning the transformed data frame when done.

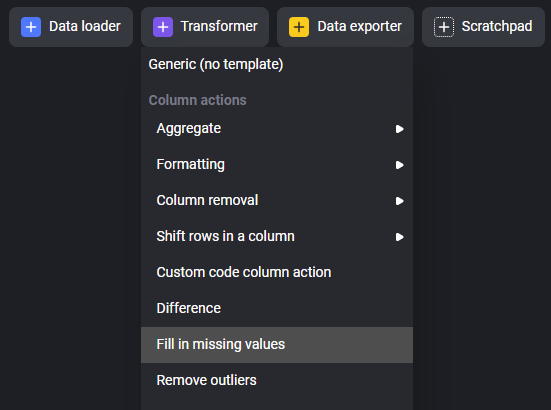

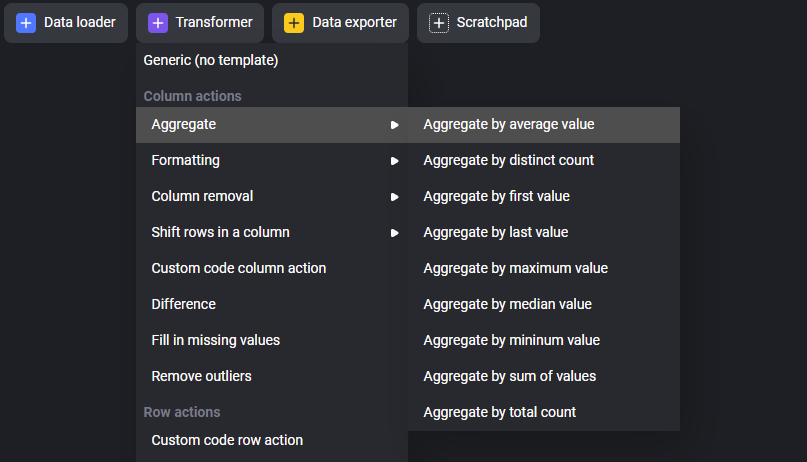

Suppose then you’re interested in computing the average fare and age by each location of embarkation - Southampton (S), Cherbourg (C), and Queenstown (Q). Transformer Actions can help us here as well - create a new transformer using the “Aggregate/Aggregate by average value” template.

You should see the following code in your new transformer block.

You should see the following code in your new transformer block.

action_codeis used by aggregation-type transformer actions to filter rows of the dataset before performing the aggregation. For example, if the “Embarked” column had null values, we could filter them out using the filter'Embarked != null'. In this case we won’t perform any filtering. See Filter Syntax for more details on valid filter syntax.- Since we want to aggregate over “Fare” and “Age”, our arguments are

['Fare', 'Age'] - In

options, the only parameter isgroupby_columnswhich is the list of the columns to group by when performing the aggregation. In this case, the column we want to use to form groups is “Embarked” outputsis used to specify the name and type of the new columns created by the aggregation. As there are two columns inargumentsthat we want to perform the aggregation over, there should be two corresponding entries inoutputs.

- Identify a transformation that may need to be made to your data

- Create a transformer using the transformer action that corresponds to your template

- Fill in the parameters for the transformer action to build your transformer action payload

- Build a

BaseActionobject using the payload and execute the action to get the transformed data frame

Core Concepts

The core object of any transformer action is the payload, a JSON object that describes the transformation to perform alongside settings for that transformation. A sample transformer action payload is shown below:32. Here is a list of the keys used in transformer action payloads:

| Key | Description |

|---|---|

| axis | Axis to perform the transformation on. Either ‘row’ or ‘column’. |

| action_type | Type of action to perform. See Transformer Action Reference for the list of action types. |

| action_code | Text field for specifying any code such as filters. |

| action_arguments | The columns to perform the transformation on. |

| action_options | Other settings to provide to the transformer action. See Transformer Action Reference for specific details on which actions require which options. |

| action_variables | Variable metadata for each input argument (column). Specifies column name and type. |

| outputs | Variable metadata for each output column. Specifies column name and type. See Column Types for information on possible column types to specify. |

build_transformer_action factory to generate the payload. The above snippet generates the same payload as above:

build_transformer_action.

The transformer action payload, once built, can be used to construct a BaseAction object. This object processes the payload to identify the transformations to apply a data frame. Through this action object, the transformer action can be applied to a data frame using the execute function:

Building Transformer Actions

build_transformer_action -build_transformer_action(df: DataFrame, action_type: ActionType | str, arguments: List[str], action_code: str, options: Dict, axis: Axis | str, outputs: List[Dict]) -> Dict:

Source: mage_ai.data_cleaner.transformer_actions.utils.build_transformer_action

Builds transformer action payload from arguments. The output of this function can be passed

as input to transformer_actions.base.BaseAction in order to perform the requested transformation.

Note: Action variables are inferred from arguments and df.

- Args:

df (DataFrame): The data frame to build a transformer action payload for.action_type (ActionType | str): Transformer action to perform.arguments (List[str], optional): Columns to perform this action on. Defaults to[].action_code (str, optional): Special code or query to execute with action. Defaults to''.options (Dict, optional): Options specifying behavior of action. Defaults to{}.axis (Union[Axis, str], optional): Axis of the data frame to apply the action to. Defaults toAxis.COLUMN.outputs (List[Dict], optional): Specifies metadata of newly created columns. Defaults to[].

- Returns: (

Dict) Transformer action payload to use withtransformer_actions.base.BaseAction - Example:

Transformer Action Reference

- Building Transformer Actions

- Transformer Action Reference

- Column Actions

- Row Actions

- Appendix: Filter Syntax

- Appendix: Column Types

Column Actions

Aggregation Actions

Applies some aggregation function over groups determined by values in some columns. A set of grouping columns are provided, whose unique combinations of values are used to form groups. For example, consider the dataset below:| Paid Dues | Due Amount |

|---|---|

| Yes | $5.00 |

| No | $15.20 |

| Yes | $3.50 |

| Yes | $1.28 |

| No | $25.03 |

| Paid Dues | Due Amount |

|---|---|

| Yes | $5.00 |

| Yes | $3.50 |

| Yes | $1.28 |

| Paid Dues | Due Amount |

|---|---|

| No | $15.20 |

| No | $25.03 |

| Paid Dues | Total Due Amount |

|---|---|

| Yes | $9.78 |

| No | $40.23 |

| Paid Dues | Due Amount | Total Due Amount by Payment Status |

|---|---|---|

| Yes | $5.00 | $9.78 |

| No | $15.20 | $40.23 |

| Yes | $3.50 | $9.78 |

| Yes | $1.28 | $9.78 |

| No | $25.03 | $40.23 |

build_transformer_action, but have different types corresponding to the different aggregation functions that could be applied to the groups.

Example

- action_code: Filter for selecting rows before aggregation is performed. See Filter Syntax for more information on valid filter syntax.

- arguments: Columns to compute aggregate values over. For each argument a new column will be created to store the aggregates values over the column.

- If no arguments are provided, no aggregation is performed

- options:

groupby_columns(optional) - list of columns to form groups with when aggregating. A group consists of each unique combination of values chosen from each of the columns specified in this option.- If this is empty, aggregation is performed over the first column specified in arguments (only if arguments is nonempty - if empty no aggregation is performed) and only one column is added

timestamp_feature_a(optional) - time feature A (a column in the data frame)timestamp_feature_b(optional) - time feature B (a column in the data frame)window(optional) - max time window between feature A and feature B in seconds

- outputs: Specifies the metadata for each output column of the aggregation (corresponding to the aggregation created from input argument in arguments). This array must be the same length as arguments, and each entry must contain the following metadata:

uuid (string): Name of the new columncolumn_type (string | ColumnType): Type of the new column ( Seemage_ai.data_cleaner.column_types.constants.ColumnTypefor options)

build_transformer_action.

| Name | Action Type Enum | Description |

|---|---|---|

| Average | ActionType.AVERAGE | Averages input column value over each group |

| Count Distinct | ActionType.COUNT_DISTINCT | Counts the number of distinct rows in each group per input column |

| Count | ActionType.COUNT | Counts the number of rows in each group per input column |

| First | ActionType.FIRST | For each input column, selects first item in each group (as appearing in the order of the original data frame) |

| Last | ActionType.LAST | For each input column, selects last item in each group (as appearing in the order of the original data frame) |

| Maximum | ActionType.MAX | Gets the maximum value per group for each input column |

| Median | ActionType.MEDIAN | Gets the median value per group for each input column |

| Minimum | ActionType.MIN | Gets the maximum value per group for each input column |

| Sum | ActionType.SUM | Sums over all values in each group per input column |

Formatting Actions

These transformer actions involve reformatting column names or the values in columnsClean Column Names

Cleans column names according to the following rules:- Names are converted to snake case

- All wrapping whitespace or underscores are removed

- All non-alphanumeric characters (except ”_”) are removed

- Numbers are prefixed with “number_”

- All Python keywords are postfixed by ”_”

- all characters are lower case

- no whitespace is used (which makes referring to columns in code easier)

| Original Name | Cleaned Name | Notes |

|---|---|---|

| good_name | good_name | columns in snake case are left unchanged |

| bad case | bad_case | |

| %@#342%34@@#342 | number_34234342 | Symbols are removed first, then number is cleaned |

| PascalCaseColumn | pascal_case_column | |

| camelCaseText | camel_case_text | |

| ___snake_case___ | snake_case | Surrounding underscores are stripped |

- arguments: Columns whose name to clean. If empty, no columns names are cleaned.

Fix Syntax Errors

Marks syntax errors in column values. Syntax errors are defined as values that are improperly formatted or of the incorrect type. For example:- A number in a text column

- An improperly formatted email, phone number, or zip code

- An improperly formatted date

"invalid"if column is of categorical or string typepandas.NaTif column is a datetimenp.nanif column is a number type

- arguments: Columns to mark syntax errors for. If empty no columns are checked.

Reformat Values

Reformats values in column based on requested action. The currently supported reformats are:- Standardize capitalization (

reformat = 'caps_standardization'): Forces text column to follow a single capitalization strategy (either lowercase or uppercase) - Convert currencies to a number (

reformat = 'currency_to_num'): Converts a currency value (by default stored as a string) to a decimal number. If unable to convert, doesn’t perform conversion- Supported currencies: $, CAD, £, €, ¥, Rs, 元

Original String Parsed Decimal Notes ” $ 10000” 10000 ”- ¥ 22.324523” -22.324523 Negation in front of symbol supported ” 10000 元 “ 10000 Currencies with postfixed symbols are supported ”0.42 €“ 0.42 ”- 3.42032 CAD” -3.42032 ”Rs - 100000.23” -100000.23 Negation after symbol is supported

- Supported currencies: $, CAD, £, €, ¥, Rs, 元

- Convert string to datetime (

reformat = 'date_time_conversion'): Converts a string value representing a datetime to apandas.Timestampobject if correctly formatted, else converts toNone. - Trim whitespace (

reformat = 'trim'): Removes leading and trailing whitespace from text columns.

- arguments: Columns to apply the reformat action to

- options:

reformat(optional): The reformat action to apply to the specified columns. Can be:None- no reformat is applied'caps_standardization'- standardize capitalization'currency_to_num'- convert currency string to number'date_format_conversion'- convert datetime string topandas.Timestamp'trim'- remove leading and trailing whitespace from text columns

capitalization(optional): Specifies the capitalization strategy to use when standardizing capitalization. This argument is ignored unlessreformat = "caps_standardization". Options are['lowercase', 'uppercase'].

Column Removal Actions

Select Columns

Keeps only the specified columns; removes all other columns from data frame. Example:- arguments: The columns to keep in the data frame. If empty, all columns are removed.

Remove Columns

Drops the specified columns. Example:- arguments: The columns to remove in the data frame. If empty, no columns are removed.

Row Shifting Actions

These actions copy a column with all rows shifted up or down by a certain amount. When rows are shifted, one or more values are deleted and some new values have to be filled in. The fill-in data types are:| Type | Fill Value |

|---|---|

| Numeric | np.nan |

| Datetime | pandas.NaT |

| Other Types | None |

Shift Up

Shifts all rows in a column up by one. The first entry is deleted and the last entry is turned to a null type. Example:- arguments: The column to shift up. At most one column can be shifted at once:

- If multiple columns are provided as arguments, only the first is shifted up

- If no columns are provided then no shifting is performed

- outputs: Metadata for the newly added upshifted column. Must have at most a single entry containing the following information:

uuid: Name of the new upshifted columntype: Data type of the new upshifted column

Shift Down

Shifts all rows up by periods rows. The last periods entries are deleted and the first periods are converted to a null type. Example:- arguments: The column to shift down. At most one column can be shifted at once:

- If multiple columns are provided as arguments, only the first is shifted down

- If no columns are provided then no shifting is performed

- options:

periods(optional): Specifies how many rows to shift down. Defaults to 1.groupby_columns(optional): Specifies columns to group by. If specified, shifts each group by periods rows instead of the entire data frame. The resulting column is then returned. If unspecified no grouping is performed. Multiple columns can be specified to group by.

- outputs: Metadata for the newly added downshifted column. Must have at most a single entry containing the following information:

uuid: Name of the new downshifted columntype: Data type of the new downshifted column

Feature Scaling Actions

These actions are used to normalize the range of independent variables or features of data. These actions are generally performed during the data preprocessing step.| Type | Formula |

|---|---|

| Normalization | (X-Xmin)/(Xmax-Xmin) |

| Standardization | (X-Xmean)/Xstd |

Normalize Data

Perform Normalization on the desired columns of a dataset. Example:- arguments: The columns to Normalize.

- If no columns are provided then no Normalization is performed

Standardize Data

Perform Standardization on the desired columns of a dataset. Example:- arguments: The columns to Standardize.

- If no columns are provided then no Standardization is performed

Other Column Actions

Difference

Adds a new column where each row is the difference between every consecutive two rows in the original column. Example:- arguments: The column to calculate difference on. At most one column can be specified:

- If multiple columns are provided as arguments, only the first is used in calculated

- If no columns are provided then no calculation

- outputs: Metadata for the newly added difference column. Must have at most a single entry containing the following information:

uuid: Name of the difference columntype: Data type of the difference column (most often will be a number)

Fill In Missing Values

Imputes missing values using different strategies:| Strategy | Description |

|---|---|

| Average | Fill missing values with the average per column. |

| Column | Fill missing values with values from another column. |

| Constant | Fill missing values with a placeholder constant. Can fill with a specific value; otherwise a default placeholder is chosen by column type. |

| Median | Fill missing values with the median per column. |

| Mode | Fill missing values with the most frequent value per column. |

| Random | Fill missing values with a randomly sampled non-null value from same column. |

| Sequential | Fill missing values with the previous row. Should only be used with timeseries columns. |

- arguments: The columns to impute (fill in the missing values).

- options:

strategy(required): Specifies strategy to use. See table above or enumImputationStrategyvalue(optional): Value to fill in missing value with. This argument is ignored unlessstrategy = 'constant', in which case the constant value used to impute isvalue.timeseries_cols = [](optional): Columns which specify the time series in the data frame. This argument is ignored unlessstrategy = 'sequential', in which case the data frame is sorted along these columns to sequentially impute data in the order of the time series.

Remove Outliers

Removes multidimensional outliers in the data frame by analyzing numerical columns. If there are no numerical columns, no check is performed. Null values are ignored when checking for outliers. The following methods are supported for detecting outliers:| Method | Description |

|---|---|

'itree' | Uses the Isolation Tree algorithm. Number of trees is set to 100. |

'lof' | Uses the Local Outlier Factor algorithm. Number of neighbors for computing local density scales with amount of data and contamination rate. |

'auto' | Automatically determines from above methods based on number of data points and dimensionality of the data. |

- The contamination rate for both algorithms is estimated via the per-dimension IQR (which discounts multidimensional interactions but still estimates how many outliers there likely are)

- If the input data has more than 20 dimensions, the dimensionality is reduced using PCA

- arguments: The set of columns to consider when checking for outliers. Of these columns, only numerical columns will be used to calculate outliers.

- options:

-

method(optional): Specifies outlier detection method to use:'itree''lof''auto'

'auto'.

-

Row Actions

These are transformer actions that can be applied to each row of a data frame, and so haveaxis = Axis.ROW.

Drop Duplicates

Drops duplicate rows from the data frame. Example:- arguments: the columns along which comparisons will be made to check for duplicate rows. If empty, all columns will be used to perform comparison.

- options:

-

keep(optional): specifies policy on keeping duplicates:'first'- keeps the first occurrence of the row'last'- keeps the last occurrence of the rowFalse- removes occurrences of duplicates

'last'.

-

Filter

Filters row by boolean condition. Selects all rows that meets the filter condition. Example:- action_code: filter to apply to select rows. See Filter Syntax for more information on valid filter syntax.

Remove Rows

Removes rows specified by their index. Example:- options:

rows: List of the indices corresponding to the rows to remove. If empty, no rows are removed.

Sort

Sorts rows in the specified order. Example:- arguments: the columns whose value to consider while sorting rows. If empty then sort is not performed.

- options:

ascending(optional): Specifies how to sort rows. IfTrue, then rows are sorted in ascending order; ifFalsesorted in descending order. Defaults toTrue.- If sorted in ascending order, null values are placed at the top of the data frame in the same order as they appeared in the original data frame

- If sorted in descending order, null values are placed at the bottom of the data frame in the same order as they appeared in the original data frame

ascendings(optional): A list of booleans specifying if each column specified in arguments should be sorted in ascending order (must be the same length). If specified, this list will take precedence over theascendingoption.

Appendix: Filter Syntax

Valid filters are composed of clauses of the form:- List of valid operations:

==, !=, <=, <, >, >=, contains, not containscontains(andnot contains) checks if the value is a substring (or not a substring) of values in the column specified.

- The right hand side of each clause can either be a literal value (boolean, number, string) or another column to compare to.

and and or operators and grouped with parentheses.

- If column names contain only alphanumeric characters and the special character ’_’, then the raw column name can used to filter code.

Example:

age != null and price_of_item >= 50.23 - If columns contain whitespace, newlines, and some of the following special characters:

+=-*&^%$! ?~|<>(){}[],., then the quoted column name can be used to filter code. Any quote character can be used. Example:("+= -*&^%$! ?~ |<>" == False and ' kas22d fe ($)' > 50.23) or ("dis>>> ??cou nted" contains "partial") - If columns contain any other special characters apart from those listed above, the column cannot be filtered. This is a fundamental limitation of pandas, see here for more information.

Appendix: Column Types

These are list of column types used in the Mage tool. One of these column types should be specified for every entry in the options argument of a transformer action. The enum source ismage_ai.data_cleaner.column_types.constants.ColumnType.

| Type | Enum | String Value | Description |

|---|---|---|---|

| Category | ColumnType.CATEGORY | ”category” | Categorical variable that takes one of some finite set of values. This set has at most 255 entries. |

| High Cardinality Category | ColumnType.CATEGORY_HIGH_CARDINALITY | ”category_high_cardinality” | Categorical variable that takes one of some finite set of values. This set has more than 255 entries. |

| Datetime | ColumnType.DATETIME | ”datetime” | Datetime objects or strings representing a datetime. |

ColumnType.EMAIL | ”email” | Strings that contains emails. | |

| List | ColumnType.LIST | ”list” | Lists of primitives (integers, strings, booleans, floats) of variable length. |

| Number | ColumnType.NUMBER | ”number” | Integer numbers. Can be a Python integer, NumPy integer or a stringified integer. |

| Floating Point Number | ColumnType.NUMBER_WITH_DECIMALS | ”number_with_decimals” | Floating point numbers. Can be a Python float, NumPy float, or a stringified float. |

| Phone Number | ColumnType.PHONE_NUMBER | ”phone_number” | Phone numbers (either in string form or integer form). |

| Text | ColumnType.TEXT | ”text” | Text values, such as sentences or paragraphs. |

| Binary | ColumnType.TRUE_OR_FALSE | ”true_or_false” | A variable that takes on two different values (including booleans). |

| Zip Code | ColumnType.ZIP_CODE | ”zip_code” | Zip codes (either in string form or integer form). |