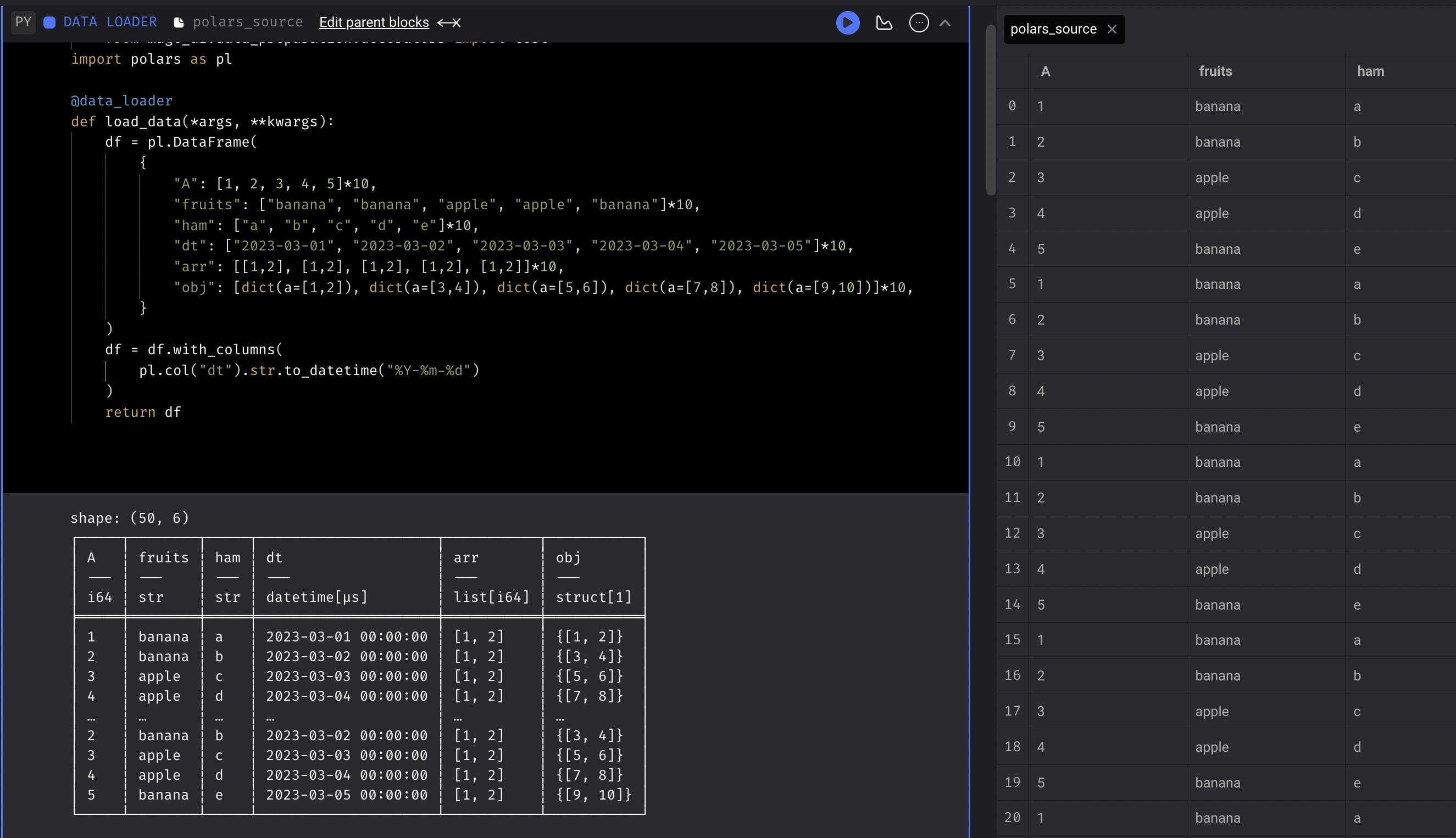

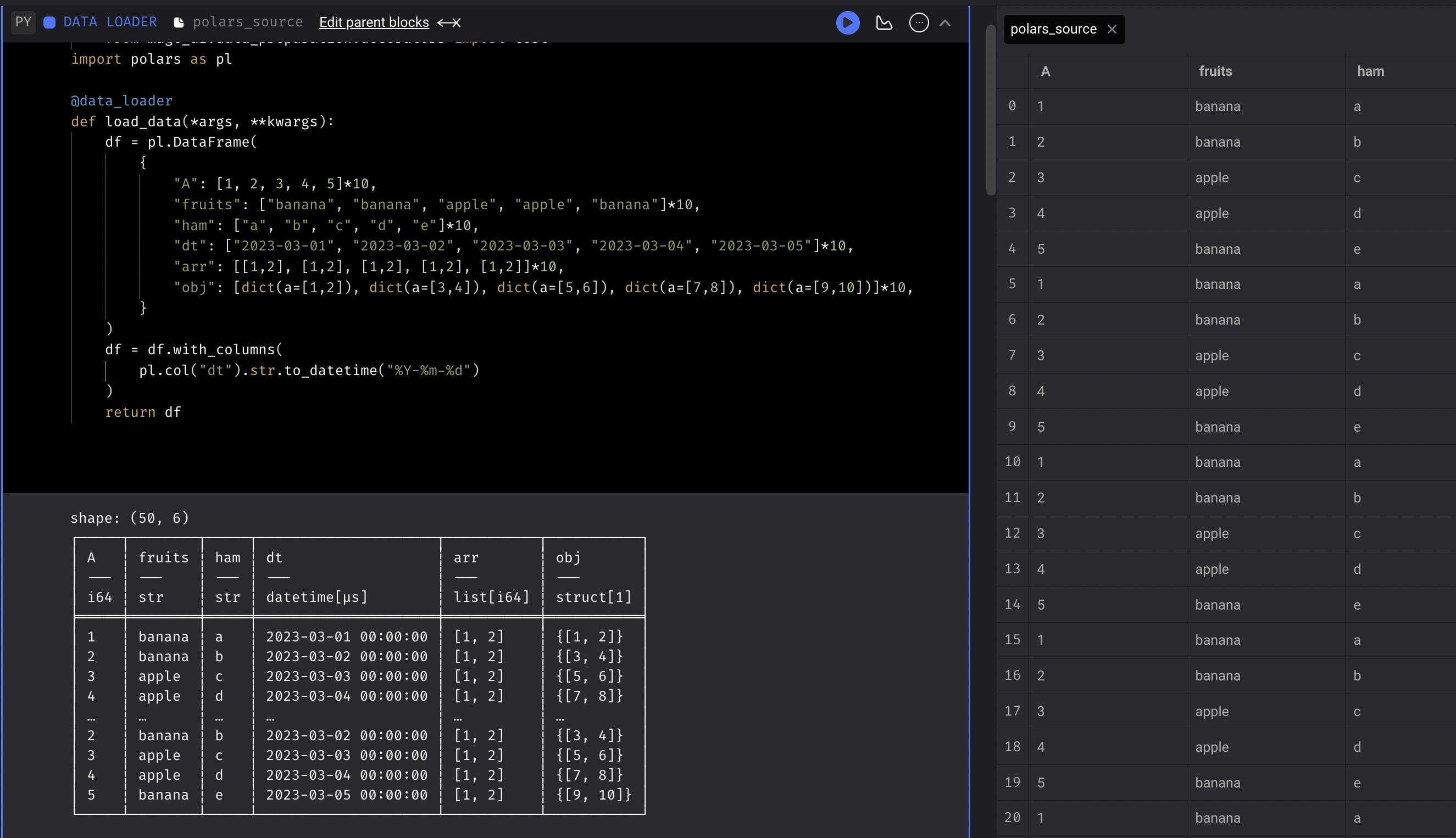

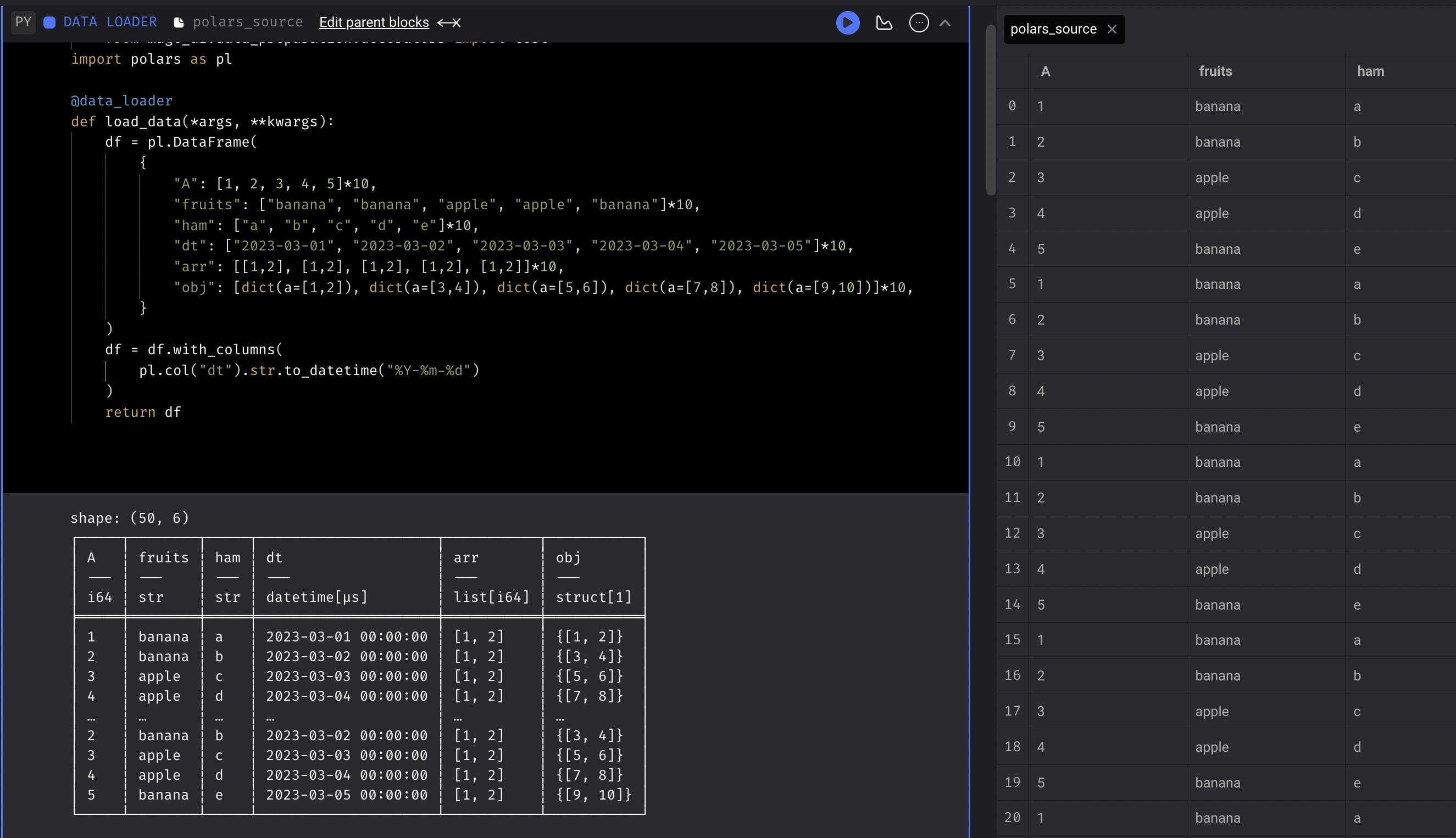

Blocks

Native support for Polars

Serialize and deserialize data using Polars DataFrames.

Mage supports using Polars DataFrame in addition to Pandas DataFrame to process the data.

If you return a Pandas or Polars DataFrame, it’ll be serialized to disk using PyArrow and stored in Parquet format. When a downstream block loads an upstream block’s output data as an input argument, that data will be deserialized back to the data type before it was serialized.

Leveraging Polars and PyArrow in this process improves the memory usage while serializing data to disk and deserializing data from disk by leveraging Polar’s lazy data frames, PyArrow partitions, and Parquet file format features such as accessing metadata and schema information without loading the raw data into memory.

Leveraging Polars and PyArrow in this process improves the memory usage while serializing data to disk and deserializing data from disk by leveraging Polar’s lazy data frames, PyArrow partitions, and Parquet file format features such as accessing metadata and schema information without loading the raw data into memory.