dbt-spark with a PySpark session.

- Build a Mage docker image with Spark by following the instructions in "Build Mage docker image with Spark environment".

- Run the following command in your terminal to start Mage using docker:

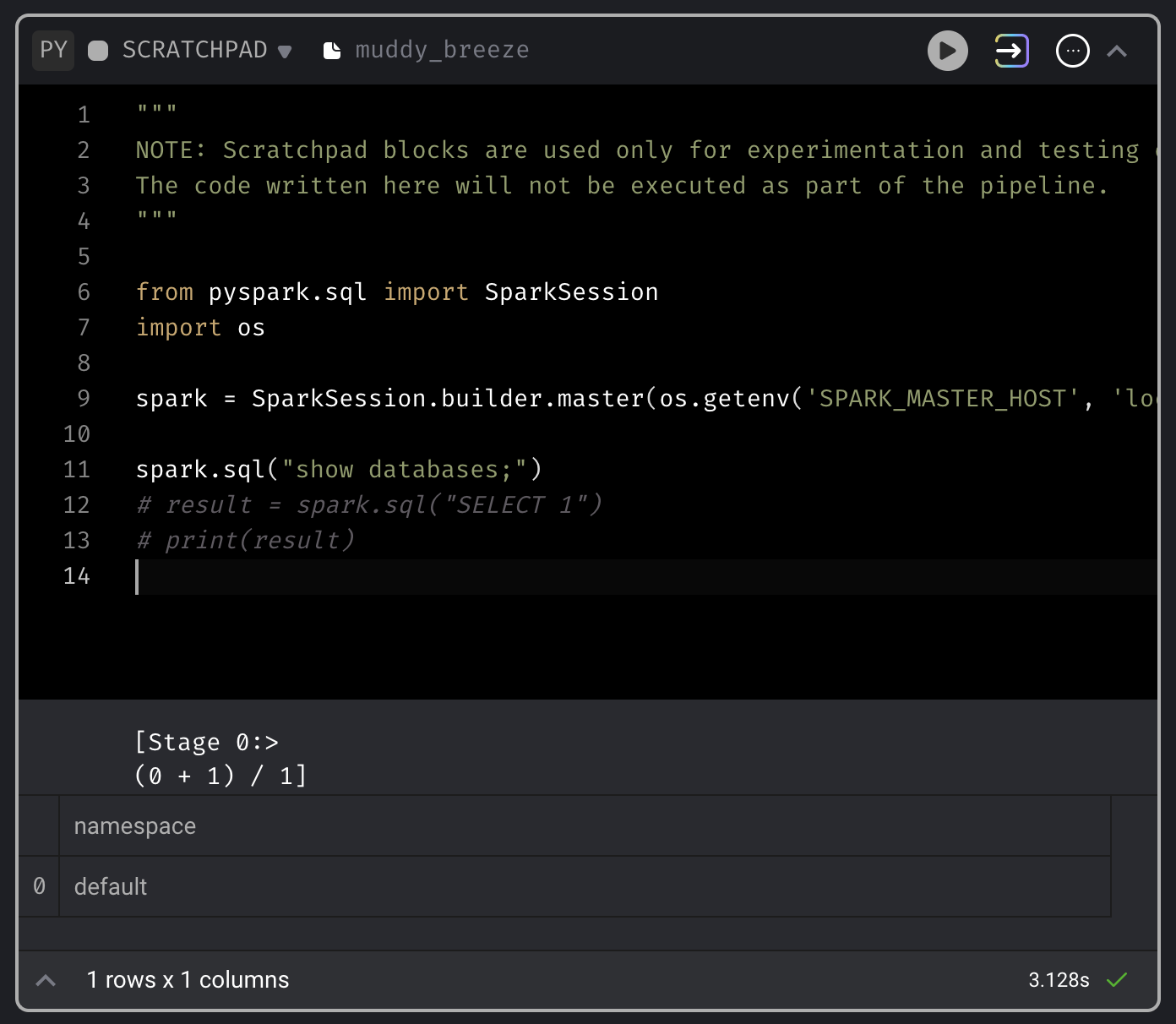

- Create a new pipeline named `dbt_spark`, and add a `Scratchpad` to test the connection with PySpark, using the following code:
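The original snippet is not reproduced in this copy. As a minimal sketch of such a connection check, assuming PySpark is importable inside the Mage container, the helper below (the name `check_spark_session` is hypothetical) obtains a session, runs a trivial query, and reports failure instead of raising:

```python
def check_spark_session():
    """Run a trivial query; return (ok, detail) instead of raising."""
    try:
        # Imported lazily so a missing PySpark install is reported, not fatal.
        from pyspark.sql import SparkSession

        # Reuse the active session if one exists, otherwise start a new one.
        spark = SparkSession.builder.getOrCreate()
        rows = spark.sql("select 1 as id").collect()
        return True, [row.asDict() for row in rows]
    except Exception as exc:  # e.g. PySpark or Java is not available
        return False, f"{type(exc).__name__}: {exc}"


ok, detail = check_spark_session()
print("Spark session OK" if ok else "Spark session failed", detail)
```

If the check fails, the printed detail usually points at what is missing from the image, such as the PySpark package or a Java runtime.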

- Click the `Terminal` icon on the right side of the Mage UI, and create a dbt project `spark_demo` with the following commands:

- On the left side of the page in the file browser, expand the folder `demo_project/dbt/spark_demo/`. Click the file named `profiles.yml`, and add the following settings to this file:
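The exact settings are not reproduced in this copy. As a hedged sketch, a dbt-spark profile that uses the `session` connection method might look like the text embedded below. The profile name `spark_demo` and the target `dev` follow the steps in this guide; `schema: default` and `host: NA` are assumptions, and the helper `write_profile` is hypothetical. The Python wrapper only writes the text to disk, so pasting the YAML into the editor works equally well.

```python
from pathlib import Path

# Assumed profile: the name `spark_demo` and target `dev` follow this guide;
# `schema: default` and `host: NA` are illustrative placeholders.
PROFILE_TEXT = """\
spark_demo:
  target: dev
  outputs:
    dev:
      type: spark
      method: session   # run dbt against an in-process PySpark session
      schema: default   # assumed schema name
      host: NA          # placeholder; the session method connects to no host
"""


def write_profile(project_dir):
    """Write PROFILE_TEXT to <project_dir>/profiles.yml and return the path."""
    path = Path(project_dir) / "profiles.yml"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(PROFILE_TEXT)
    return path
```

For example, calling `write_profile("demo_project/dbt/spark_demo")` from the directory that contains `demo_project` would create the file at the location used in this step.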

-

Save the

profiles.ymlfile by pressingCommand (⌘) + S, then close the file by pressing the X button on the right side of the file namedbt/spark_demo/profiles.yml. -

Click the button

dbt model, and choose the optionNew model. Entermodel_1as theModel name, andspark_demo/models/exampleas the folder location. -

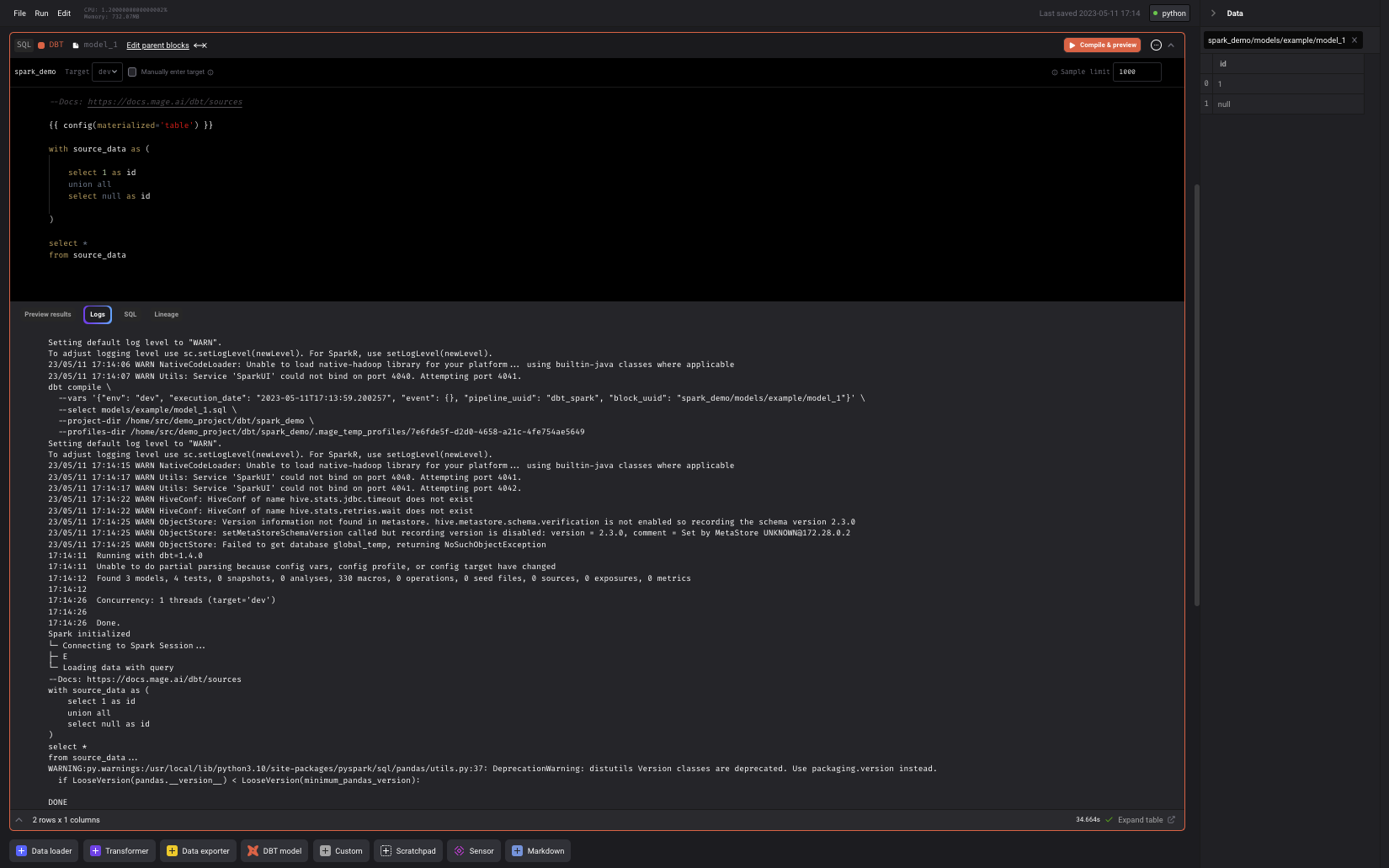

In the dbt block named

model_1, next to the labelTargetat the top, choosedevin the dropdown list. You can also checkManually enter target, and enterdevin the input field. -

Paste the following SQL into the dbt block

model_1:

- Click the `Compile & preview` button to execute this new model, which should generate results similar to the following: