Python3 kernel

Python3 is the default kernel. You can use it to prototype and transform small to medium-sized datasets. Pipelines built with this kernel can be executed in Python environments.

PySpark kernel

We support running the PySpark kernel to prototype with large datasets and to build pipelines that transform large datasets. Instructions for running the PySpark kernel:

- Launch the editor with Docker:

  `docker run -it -p 6789:6789 -v $(pwd):/home/src mageai/mageai /app/run_app.sh mage start [project_name]`

- Specify the PySpark kernel related metadata in the project’s `metadata.yaml` file.
- Launch a remote AWS EMR Spark cluster. Install the `mage_ai` library in the bootstrap actions, and make sure the EMR cluster is publicly accessible.
  - You can use the `create_emr.py` script under the `scripts/spark` folder to launch a new EMR cluster. Example: `python3 create_cluster.py [project_path]`. When executing the script, make sure your AWS credentials are provided in the `~/.aws/credentials` file or in the environment variables `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`.
- Connect to the remote Spark cluster with the following command:

  `ssh -i [path_to_key_pair] -L 0.0.0.0:9999:localhost:8998 [master_ec2_public_dns_name]`

  - `path_to_key_pair` is the path to the key pair file for the `ec2_key_pair_name` configured in the `metadata.yaml` file.
  - You can find the `master_ec2_public_dns_name` on your newly created EMR cluster’s page, under the attribute “Master public DNS”.
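The setup steps above can be sanity-checked with a small script before you connect. The following is a minimal Python sketch, not part of Mage: all helper names are hypothetical, the credential check only looks at the two sources named above, and `parse_s3_path` assumes the variables dir described in the Metadata section is a plain `s3://bucket/prefix` URI. It only validates inputs and assembles the documented `ssh` command; it does not create the cluster or call AWS.

```python
import configparser
import os
import socket
from pathlib import Path
from typing import List, Optional, Tuple
from urllib.parse import urlparse


def aws_credentials_available(credentials_file: Optional[Path] = None,
                              profile: str = "default") -> bool:
    """Return True if AWS keys are set via env vars or the shared credentials file.

    Hypothetical helper: only the two sources named in the docs are checked;
    other credential sources (instance roles, SSO, ...) are ignored here.
    """
    if os.environ.get("AWS_ACCESS_KEY_ID") and os.environ.get("AWS_SECRET_ACCESS_KEY"):
        return True
    path = credentials_file or Path.home() / ".aws" / "credentials"
    if not path.is_file():
        return False
    parser = configparser.ConfigParser()
    parser.read(path)
    # The file is INI-style: a [profile] section holding both key options.
    return (parser.has_option(profile, "aws_access_key_id")
            and parser.has_option(profile, "aws_secret_access_key"))


def parse_s3_path(path: str) -> Tuple[str, str]:
    """Split an s3://bucket/prefix URI into (bucket, prefix); raise ValueError otherwise."""
    parsed = urlparse(path)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"expected an s3://bucket/prefix path, got {path!r}")
    # urlparse puts the bucket in netloc and the key prefix in path (with a leading '/').
    return parsed.netloc, parsed.path.lstrip("/")


def build_tunnel_command(key_pair_path: str, master_public_dns: str,
                         local_port: int = 9999, remote_port: int = 8998) -> List[str]:
    """Assemble the documented ssh port-forwarding command as an argv list."""
    # 8998 is Apache Livy's default port on the EMR master node.
    forward = f"0.0.0.0:{local_port}:localhost:{remote_port}"
    return ["ssh", "-i", key_pair_path, "-L", forward, master_public_dns]


def tunnel_is_up(host: str = "localhost", port: int = 9999, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the forwarded local port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `parse_s3_path("s3://my-bucket/mage/variables")` returns `("my-bucket", "mage/variables")`, and `build_tunnel_command` returns an argument list you could pass to `subprocess.run` to open the tunnel.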

Metadata

When using the PySpark kernel, you need to specify an S3 path as the variables dir, which is used to store the output of each block. You also need to provide the EMR cluster config fields used for cluster creation. These fields can be configured in the project’s `metadata.yaml` file. Example:

Pipeline execution

Pipelines built with this kernel can be executed in PySpark environments. We support executing PySpark pipelines on an EMR cluster automatically. You’ll need to specify some EMR config fields in the project’s `metadata.yaml` file; we’ll then launch an EMR cluster when you execute the pipeline with the `mage run [project_name] [pipeline]` command. Example EMR config:
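If you trigger runs from another Python process (for example, a scheduler script), one option is to shell out to the same `mage run` CLI command. This is a minimal sketch, not a Mage API: `mage_run_command` and `run_pipeline` are hypothetical helper names, and the sketch assumes the `mage` executable is on `PATH` and that the EMR config fields are already set in `metadata.yaml`.

```python
import shutil
import subprocess
from typing import List


def mage_run_command(project_name: str, pipeline: str) -> List[str]:
    """Build `mage run [project_name] [pipeline]` as an argv list (hypothetical helper)."""
    return ["mage", "run", project_name, pipeline]


def run_pipeline(project_name: str, pipeline: str) -> int:
    """Run a pipeline through the mage CLI and return the CLI's exit code.

    Assumes the `mage` executable is on PATH and that the EMR config fields
    are already set in the project's metadata.yaml.
    """
    if shutil.which("mage") is None:
        raise FileNotFoundError("the `mage` CLI was not found on PATH")
    # Passing a list (no shell) avoids quoting problems in project or pipeline names.
    completed = subprocess.run(mage_run_command(project_name, pipeline), check=False)
    return completed.returncode
```

Returning the exit code instead of raising lets the caller decide how to handle a failed run.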

Restart kernel

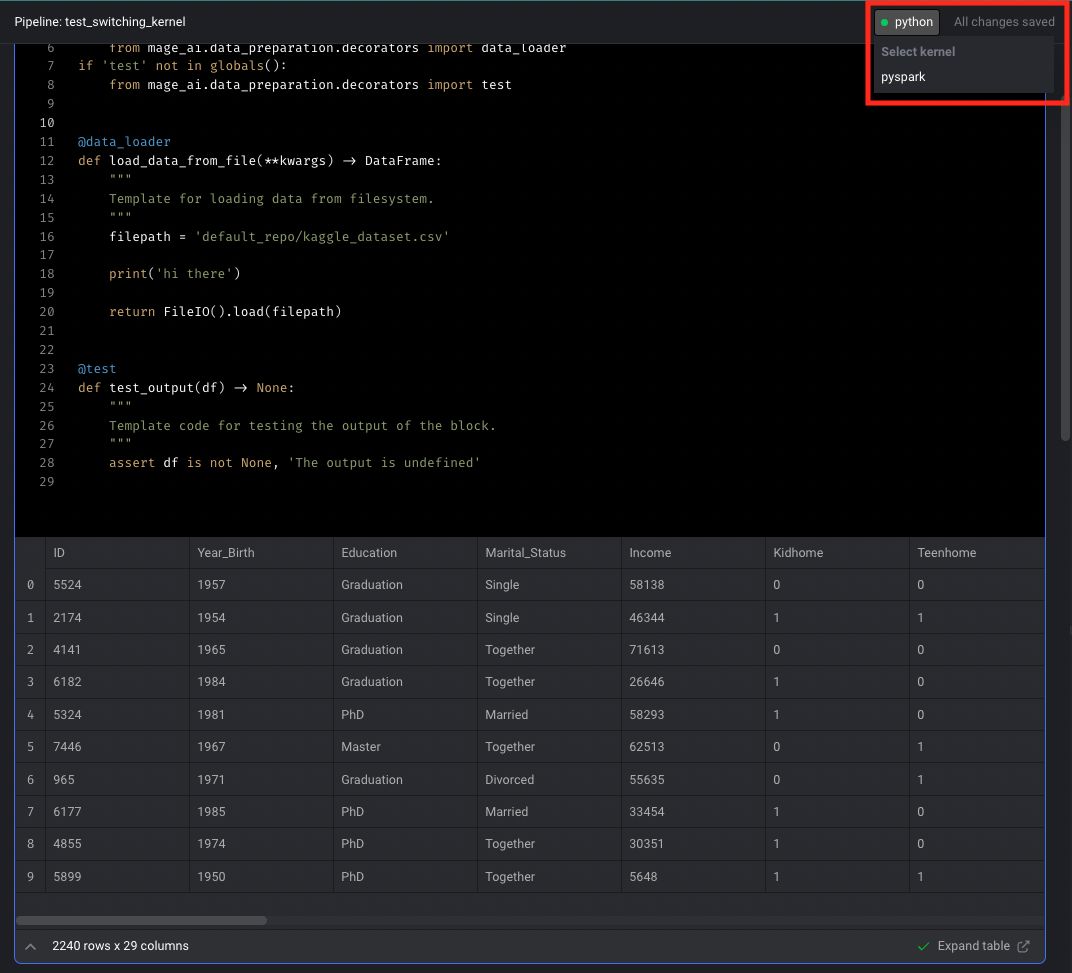

If your block execution is hanging in the notebook and no block output is printed, you can try interrupting the block execution and restarting the kernel. You can find the “Restart kernel” button in the “Run” menu at the top of the notebook.

Switching kernels

To switch kernels, you first need to follow the steps in the corresponding section above. Then, you can switch kernels through the kernel selection menu in the UI. If you’re switching back to the Python3 kernel, you may need to undo the configuration changes needed for the other kernel types.