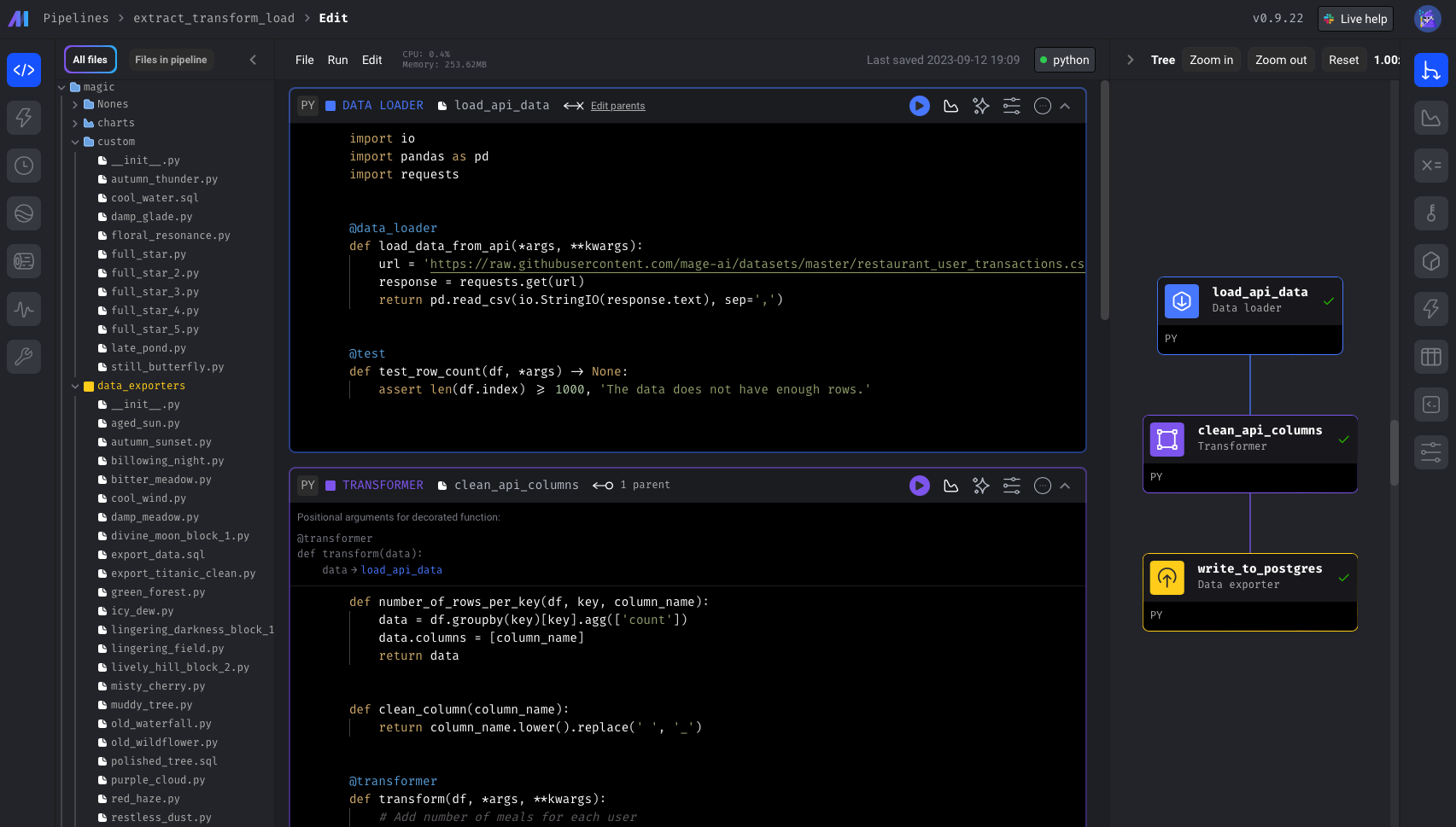

Create a new pipeline

Each pipeline is represented by a YAML file in a folder named pipelines/ under the Mage project directory.

For example, if your project is named demo_project and your pipeline is named etl_demo, then the pipeline’s files live in the demo_project/pipelines/etl_demo/ folder.

Create a new folder in the demo_project/pipelines/ directory. Name this new folder after the name of your pipeline.

Add two files to this new folder: __init__.py and metadata.yaml.
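The steps above can be scripted. The following Python sketch uses only the standard library and assumes a minimal metadata.yaml needs just four keys (blocks, name, type, uuid) with type set to python; those key names and the helper scaffold_pipeline are assumptions for illustration, not a Mage command.

```python
from pathlib import Path
import tempfile


def scaffold_pipeline(project_dir: Path, pipeline_name: str) -> Path:
    """Hypothetical helper: create pipelines/<pipeline_name>/ with its two files."""
    pipeline_dir = project_dir / "pipelines" / pipeline_name
    pipeline_dir.mkdir(parents=True, exist_ok=True)

    # Empty __init__.py so the folder is importable as a Python package.
    (pipeline_dir / "__init__.py").touch()

    # Assumed minimal metadata: no blocks yet, a standard Python pipeline.
    metadata = (
        "blocks: []\n"
        f"name: {pipeline_name}\n"
        "type: python\n"
        f"uuid: {pipeline_name}\n"
    )
    (pipeline_dir / "metadata.yaml").write_text(metadata)
    return pipeline_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        created = scaffold_pipeline(Path(tmp) / "demo_project", "etl_demo")
        print(sorted(p.name for p in created.iterdir()))
        # prints ['__init__.py', 'metadata.yaml']
```

Writing the YAML as a plain string keeps the sketch dependency-free; a real tool would more likely build a dict and serialize it with a YAML library.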

In the metadata.yaml file, add the following content:

Change etl_demo to whatever name you’re using for your new pipeline.

Sample pipeline metadata content

This sample pipeline metadata.yaml will produce the following block dependencies:

metadata.yaml sections

Pipeline attributes

An array of blocks that are in the pipeline.

Unique name of the pipeline.

The type of pipeline. Currently available options are:

databricks, integration, pyspark, python (most common), streaming

Unique identifier of the pipeline. This UUID must be unique across all pipelines.
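The type and UUID rules above lend themselves to a quick pre-save check. This is a minimal sketch over already-parsed metadata dicts; it assumes the fields are stored under the keys type and uuid, and validate_pipelines is a hypothetical helper rather than Mage’s own validation.

```python
from __future__ import annotations

# Pipeline types listed on this page.
PIPELINE_TYPES = {"databricks", "integration", "pyspark", "python", "streaming"}


def validate_pipelines(pipelines: list[dict]) -> list[str]:
    """Return human-readable problems found across several pipelines' metadata."""
    problems: list[str] = []
    seen: set = set()
    for meta in pipelines:
        uuid = meta.get("uuid")
        if meta.get("type") not in PIPELINE_TYPES:
            problems.append(f"{uuid}: unsupported type {meta.get('type')!r}")
        if uuid in seen:  # UUIDs must be unique across all pipelines
            problems.append(f"{uuid}: duplicate pipeline UUID")
        seen.add(uuid)
    return problems


if __name__ == "__main__":
    print(validate_pipelines([
        {"uuid": "etl_demo", "type": "python"},
        {"uuid": "etl_demo", "type": "batch"},
    ]))
```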

Optional description of what the pipeline does.

Pipeline-level executor type. Supported values:

ecs, gcp_cloud_run, azure_container_instance, k8s, local_python (most common), pyspark

Number of concurrent executors used to run the pipeline. Used in streaming pipelines.

Optional configuration specific to the selected executor type.

Refer to the following documentation for executor-specific options:

Spark-specific configuration for PySpark pipelines. Mirrors the keys you would normally pass to SparkConf (e.g., spark_master, executor_env, spark_jars).

Retry configuration at the pipeline level.

See documentation for details.

Configuration for pipeline notification messages (e.g., on failure or success).

See documentation for details.

Concurrency settings for block execution within the pipeline.

See documentation for details.

Available keys: block_run_limit (maximum number of blocks that can run in parallel), pipeline_run_limit, pipeline_run_limit_all_triggers, and on_pipeline_run_limit_reached.
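To make block_run_limit concrete, here is a generic sketch (not Mage’s executor) in which a thread pool sized to the limit caps how many blocks run at the same time. run_blocks and the sleep that stands in for real block work are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_blocks(block_uuids, block_run_limit):
    """Run independent blocks with at most block_run_limit in flight at once.

    Returns the highest number of blocks observed running simultaneously.
    """
    lock = threading.Lock()
    running = 0
    peak = 0

    def run_block(uuid):
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        time.sleep(0.05)  # stand-in for the block's real work
        with lock:
            running -= 1
        return uuid

    # The pool size is the cap on how many blocks execute in parallel.
    with ThreadPoolExecutor(max_workers=block_run_limit) as pool:
        list(pool.map(run_block, block_uuids))
    return peak


if __name__ == "__main__":
    print(run_blocks([f"block_{i}" for i in range(6)], block_run_limit=2))
```

A fixed-size pool is the simplest way to express a hard cap; a semaphore acquired around each block would achieve the same limit when blocks are launched from several places.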

Whether to cache block output in memory during execution.

If true, runs all blocks in a single process or k8s pod.

Blocks that run after your main graph finishes (e.g., notifications, cleanup). Same shape as items in blocks.

Conditional blocks that can short-circuit or branch execution. Same shape as items in blocks.

Extension blocks (e.g., Great Expectations). Same shape as items in blocks.

Chart or reporting blocks shown in the pipeline UI. Same shape as items in blocks.

Pipeline-level settings; currently supports triggers.save_in_code_automatically to control whether new or updated triggers are persisted to YAML.

Key/value pairs available to the pipeline for templating. Useful for environment-specific values that are not secrets.
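The callback and conditional blocks described above imply an execution order. The sketch below illustrates it generically: a conditional returning False skips its block, and callbacks run once the main graph is done. It assumes callbacks run whether the graph succeeded or failed; blocks are plain Python callables run in dictionary order, and run_pipeline plus the "success"/"failed" status strings are hypothetical, not Mage’s runtime API.

```python
from __future__ import annotations

from collections.abc import Callable


def run_pipeline(
    blocks: dict[str, Callable[[], object]],
    conditionals: dict[str, Callable[[], bool]],
    callbacks: list[Callable[[str], None]],
) -> list[str]:
    """Run blocks in order, skipping any whose conditional returns False,
    then call every callback with the final status."""
    executed: list[str] = []
    status = "success"
    try:
        for uuid, block in blocks.items():
            condition = conditionals.get(uuid)
            if condition is not None and not condition():
                continue  # short-circuit: the conditional vetoed this block
            block()
            executed.append(uuid)
    except Exception:
        status = "failed"
        raise  # let the caller see the original error
    finally:
        # Callbacks run after the main graph, on success or failure.
        for callback in callbacks:
            callback(status)
    return executed


if __name__ == "__main__":
    events: list[str] = []
    ran = run_pipeline(
        blocks={"load_data": lambda: None, "export_data": lambda: None},
        conditionals={"export_data": lambda: False},
        callbacks=[events.append],
    )
    print(ran, events)  # prints ['load_data'] ['success']
```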

Configure an external state store for pipeline runs.

Free-form labels to organize and search for pipelines.
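Because tags are free-form labels, searching by them is a membership test. A minimal sketch, assuming each parsed pipeline dict stores its labels in a list under a tags key next to its uuid (both key names and pipelines_with_tag are assumptions):

```python
from __future__ import annotations


def pipelines_with_tag(pipelines: list[dict], tag: str) -> list[str]:
    """Return the UUIDs of pipelines whose tags include the given label."""
    # Pipelines without any tags are treated as having an empty list.
    return [p["uuid"] for p in pipelines if tag in p.get("tags", [])]


if __name__ == "__main__":
    catalog = [
        {"uuid": "etl_demo", "tags": ["daily", "finance"]},
        {"uuid": "ingest_events", "tags": ["streaming"]},
        {"uuid": "scratch"},  # no tags at all
    ]
    print(pipelines_with_tag(catalog, "finance"))  # prints ['etl_demo']
```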

Identifier for the user or process that created the pipeline.

Environment-specific overrides for any top-level pipeline field. Mage Pro only.

See environment overrides for guidance.
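As a rough illustration of overriding a top-level field, the sketch below assumes overrides are keyed by environment name and replace top-level fields wholesale (a shallow merge). That layout, the executor_type key in the example, and apply_overrides are hypothetical; the actual Mage Pro format is described in the environment overrides documentation.

```python
def apply_overrides(pipeline: dict, overrides_by_env: dict, env: str) -> dict:
    """Return a copy of `pipeline` with top-level fields replaced for `env`."""
    merged = dict(pipeline)  # shallow copy, so the base metadata is untouched
    merged.update(overrides_by_env.get(env, {}))  # unknown env: no overrides
    return merged


if __name__ == "__main__":
    base = {"name": "etl_demo", "executor_type": "local_python"}
    overrides = {"prod": {"executor_type": "k8s"}}
    print(apply_overrides(base, overrides, "prod")["executor_type"])  # prints k8s
    print(apply_overrides(base, overrides, "dev")["executor_type"])  # prints local_python
```

A shallow merge replaces nested values such as a whole configuration mapping; if nested keys should be merged individually, a recursive merge would be needed instead.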

Block attributes

An array of block UUIDs that depend on this block.

These downstream blocks have access to this block’s data output.
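Downstream lists are the mirror image of upstream lists. This sketch derives, for each block, which blocks feed data into it, assuming each parsed block dict has a uuid key and a downstream_blocks key (both key names and the helper name are assumptions).

```python
from __future__ import annotations


def upstream_from_downstream(blocks: list[dict]) -> dict[str, list[str]]:
    """For each block UUID, list the blocks whose output flows into it."""
    upstream: dict[str, list[str]] = {block["uuid"]: [] for block in blocks}
    for block in blocks:
        for child in block.get("downstream_blocks", []):
            # block -> child means child receives block's output.
            upstream.setdefault(child, []).append(block["uuid"])
    return upstream


if __name__ == "__main__":
    blocks = [
        {"uuid": "load_data", "downstream_blocks": ["transform"]},
        {"uuid": "transform", "downstream_blocks": ["export"]},
        {"uuid": "export", "downstream_blocks": []},
    ]
    print(upstream_from_downstream(blocks))
    # prints {'load_data': [], 'transform': ['load_data'], 'export': ['transform']}
```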

The method for running this block of code. Currently available options are:

ecs, gcp_cloud_run, azure_container_instance, k8s, local_python (most common), pyspark

Optional configuration specific to the selected executor type.

Refer to the following documentation for executor-specific options:

Programming language used by the block. Supported values:

python (most common), r, sql, yaml

Unique name of the block.

The type of block. Currently available options are:

chart, custom (most common), data_exporter, data_loader, dbt, scratchpad, sensor, transformer

If the block’s type is data_loader, then the file must be in the [project_name]/data_loaders/ folder. It can be nested in any number of subfolders.

An array of block UUIDs that this block depends on.

These upstream blocks pass their data output to this block.
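Because each block lists its upstream blocks, a valid run order is a topological sort of that graph. The sketch below uses Python’s graphlib module and assumes each parsed block dict stores its dependencies under an upstream_blocks key; execution_order is a hypothetical helper, and Mage’s scheduler may order independent blocks differently.

```python
import graphlib


def execution_order(blocks: list[dict]) -> list[str]:
    """Return block UUIDs so that every block comes after its upstream blocks."""
    # TopologicalSorter expects {node: iterable_of_predecessors}.
    graph = {block["uuid"]: block.get("upstream_blocks", []) for block in blocks}
    # static_order() raises graphlib.CycleError if the dependencies loop.
    return list(graphlib.TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    blocks = [
        {"uuid": "export", "upstream_blocks": ["transform"]},
        {"uuid": "transform", "upstream_blocks": ["load_data"]},
        {"uuid": "load_data", "upstream_blocks": []},
    ]
    print(execution_order(blocks))  # prints ['load_data', 'transform', 'export']
```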

Unique identifier of the block. This UUID must be unique within the current pipeline. The UUID corresponds to the name of the file for this block. For example, if the UUID is load_data and the language is python, then the file name will be load_data.py.

Optional hex color used to visually organize blocks in the UI.
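The placement rules for block files (the block type decides the folder; the UUID plus language decides the file name) can be expressed as a small function. Only the data_loader folder and the python extension come from this page; the other folder and extension mappings, the dict keys, and block_file_path itself are assumptions.

```python
from pathlib import PurePosixPath

# Folder per block type. Only data_loader -> data_loaders is stated on this page;
# the other entries are assumed to follow the same pattern.
TYPE_FOLDERS = {
    "data_loader": "data_loaders",
    "transformer": "transformers",
    "data_exporter": "data_exporters",
}

# Extension per language. Only python -> .py is stated; the rest are assumptions.
EXTENSIONS = {"python": ".py", "sql": ".sql", "r": ".r", "yaml": ".yaml"}


def block_file_path(project_name: str, block: dict, subfolder: str = "") -> PurePosixPath:
    """Build the expected file path for a block from its type, uuid, and language."""
    folder = PurePosixPath(project_name, TYPE_FOLDERS[block["type"]])
    if subfolder:
        folder = folder / subfolder  # blocks may sit in nested subfolders
    return folder / f"{block['uuid']}{EXTENSIONS[block['language']]}"


if __name__ == "__main__":
    block = {"type": "data_loader", "uuid": "load_data", "language": "python"}
    print(block_file_path("demo_project", block))  # prints demo_project/data_loaders/load_data.py
```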

Block-specific configuration. For integration or dbt blocks, this mirrors the configuration visible in the block settings panel.

Markdown content rendered in the block’s documentation tab.

Optional priority for scheduling blocks when multiple are runnable.

Retry configuration at the block level.

See documentation for details.
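Retry behavior mostly comes down to how many times to retry and how long to wait between attempts. This sketch assumes a retry configuration with retries, delay, exponential_backoff, and max_delay fields; those names and both helpers are assumptions for illustration, so confirm the real keys in the retry documentation.

```python
from __future__ import annotations

import time


def retry_delays(retries: int, delay: float, exponential_backoff: bool = False,
                 max_delay: float | None = None) -> list[float]:
    """Seconds to wait before each of the `retries` extra attempts."""
    waits = []
    for attempt in range(retries):
        wait = delay * 2 ** attempt if exponential_backoff else delay
        if max_delay is not None:
            wait = min(wait, max_delay)  # cap the backoff
        waits.append(wait)
    return waits


def run_with_retries(func, retries: int, delay: float, **backoff):
    """Call func; on an exception, wait and try again, up to `retries` more times."""
    for wait in retry_delays(retries, delay, **backoff):
        try:
            return func()
        except Exception:
            time.sleep(wait)
    return func()  # final attempt: any exception now propagates


if __name__ == "__main__":
    print(retry_delays(4, 5, exponential_backoff=True, max_delay=30))  # prints [5, 10, 20, 30]
```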

Labels applied to the block for organization and filtering.

Maximum execution time for the block in seconds.
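A per-block limit in seconds can be illustrated with concurrent.futures. This is a hypothetical sketch, not Mage’s implementation: Python threads cannot be forcibly stopped, so a timed-out call is abandoned rather than killed.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def run_with_timeout(func, timeout_seconds: float):
    """Return func()'s result, or raise TimeoutError if it runs too long.

    The worker thread is not killed on timeout; it keeps running in the background.
    """
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(func)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        raise TimeoutError(f"block exceeded {timeout_seconds} seconds") from None
    finally:
        pool.shutdown(wait=False)  # don't block on a call that overran


if __name__ == "__main__":
    print(run_with_timeout(lambda: "done", timeout_seconds=1))
    try:
        run_with_timeout(lambda: time.sleep(0.2), timeout_seconds=0.05)
    except TimeoutError as exc:
        print(exc)
```

Running the block in a separate process instead of a thread would allow it to be terminated on timeout, at the cost of pickling inputs and outputs.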